Python rocks!

I have been dabbling with a bit of 3D graphics programming for the last couple of days, trying my hand at exporting models designed in the free 3D modelling software Blender and getting them rendered using OpenGL. Blender supports a Python-based scripting system where pretty much everything in Blender can be accessed via Python scripts. So, along the way I happened to see what Python was all about.

Exporting co-ordinates and normal vectors from Blender turned out to be fairly straightforward. The following script does a quick and dirty job of producing a file with all the numbers.

    import Blender
    from Blender import Scene

    f = open("co.csv", "w")

    scenes = Scene.Get()  # iterate thru all ze scenes
    for sc in scenes:
        objects = sc.getChildren()  # run thru all ze objects in this scene
        for obj in objects:
            if obj.getType() != "Mesh":  # we are interested only in meshes
                continue

            print "exporting ", obj.name

            # write the name of the object into the file; our renderer
            # ignores lines starting with the '#' character
            f.write("# " + obj.name + "\n")

            data = obj.getData(0, 1)  # get co-ord data
            for face in data.faces:  # blender gives us the co-ords face-wise
                # first we write out the normal vector for this polygon
                f.write("--" +
                        ('%f' % face.no.x) + "," +
                        ('%f' % face.no.y) + "," +
                        ('%f' % face.no.z) + "\n")

                # now, write out all the vertices
                for v in face.verts:
                    f.write(('%f' % v.co.x) + "," +
                            ('%f' % v.co.y) + "," +
                            ('%f' % v.co.z) + "\n")

    f.close()

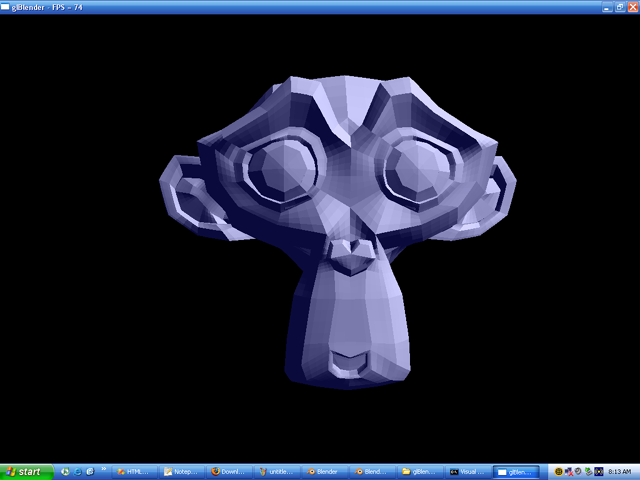

Nothing spectacular about the script really. Here's a screenshot of how Blender's default suzanne monkey model looks like in my OpenGL program.
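For reference, the file the export script writes has three kinds of lines: a "# name" line per object, a "--x,y,z" line with each face's normal, and plain "x,y,z" lines for that face's vertices. Here is a rough sketch of how a reader for that layout might look in plain Python. The function name parse_export and the sample data are made up for illustration, and it assumes every normal line is preceded by an object name line, which is what the export script produces.

```python
def parse_export(lines):
    """Group exported lines into {object_name: [(normal, [vertex, ...]), ...]}.

    Hypothetical helper, not part of the original scripts.
    """
    objects = {}
    faces = None
    for raw in lines:
        line = raw.strip()
        if not line:
            continue
        if line.startswith("#"):
            # "# name" starts a new object
            name = line[1:].strip()
            faces = objects.setdefault(name, [])
        elif line.startswith("--"):
            # "--x,y,z" is a face normal and starts a new face
            normal = tuple(float(t) for t in line[2:].split(","))
            faces.append((normal, []))
        else:
            # anything else is a vertex of the most recent face
            vertex = tuple(float(t) for t in line.split(","))
            faces[-1][1].append(vertex)
    return objects


# Made-up sample in the same layout as co.csv
sample = [
    "# Cube\n",
    "---1.000000,0.000000,0.000000\n",
    "-1.000000,-1.000000,1.000000\n",
    "-1.000000,1.000000,1.000000\n",
]

result = parse_export(sample)
for name, faces in result.items():
    print(name, len(faces), "face(s)")
```

Note that a normal with a negative x component ends up with three leading dashes, which is why the sketch strips exactly the first two characters before splitting.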

But one problem I quickly ran into was the scale of the co-ordinates output by Blender. Sometimes Blender's data would cause the model to be rendered in gigantic proportions, and I'd have to push it far into the screen to make it fit inside the window. What I really needed was a script that could post-process the co-ordinates from Blender (basically, scale them down). I figured I'd write it in Python (I could have done the scaling while exporting them from Blender of course, but where's the fun in that!) and boy, was it cool or was it cool!

Python supports this super-cool feature called "list comprehensions" that allows you to succinctly express operations that you want performed on elements in a collection. In my case the file containing the list of co-ordinates looked like this:

    -3.448783,-1.912737,-0.861946
    -3.281278,-2.116843,-0.861946
    -2.328871,-1.164436,-3.555760
    -2.328871,-1.164436,-3.555760
    -3.573251,-1.679874,-0.861946
    -3.448783,-1.912737,-0.861946

And I wanted each of those numbers scaled down by a factor. The script turned out to be remarkably short and it has elegance written all over it!

    # open the source, dest files
    src = open("co.csv", "r")
    dst = open("cos.csv", "w")

    # process each line
    line = src.readline()
    while len(line) > 0:
        # process it only if it is a non-comment and non-normal line
        if line[0] != "#" and line[0:2] != "--":
            line = ",".join([str(float(x) * 0.25)
                             for x in line.split(",")]) + "\n"

        # comment and normal lines are copied through unchanged
        dst.write(line)
        line = src.readline()

    src.close()
    dst.close()

Take special note of the statement inside the if block. Believe it or not, that single statement splits a line of comma-delimited text, converts each resulting token into a float, multiplies it by 0.25, converts the value back into a string and then concatenates the list of converted values into a comma-delimited string again! Now I know that some of you are thinking that we have traded off clarity of code for expressive power, and that is true to an extent here. But it isn't any more obtuse than, say, a regular expression! I guess it boils down to personal preference at the end of the day.
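If the one-liner feels too dense, here is roughly what it does when unrolled into separate steps, next to a compact version. This is only a sketch: the function names scale_line and scale_line_compact and the factor parameter are mine, added for illustration, and the input line is made up.

```python
def scale_line(line, factor=0.25):
    """Step-by-step version of the scaling statement (illustrative helper)."""
    tokens = line.split(",")               # split on commas; last token keeps its "\n"
    numbers = [float(t) for t in tokens]   # float() tolerates surrounding whitespace
    scaled = [n * factor for n in numbers] # scale every co-ordinate
    return ",".join([str(n) for n in scaled]) + "\n"


def scale_line_compact(line, factor=0.25):
    """The same thing as a single list comprehension."""
    return ",".join([str(float(x) * factor) for x in line.split(",")]) + "\n"


example = "4.0,-2.0,1.0\n"
print(scale_line(example), end="")          # prints 1.0,-0.5,0.25
print(scale_line_compact(example), end="")  # prints 1.0,-0.5,0.25
```

Both functions return the same string for the same input, so which one to use really is a matter of taste.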

I could have written 20 lines to do the same thing but I sure wouldn't be feeling as pleased as I am feeling right now! Python rocks!